Introducing Prompt Variants

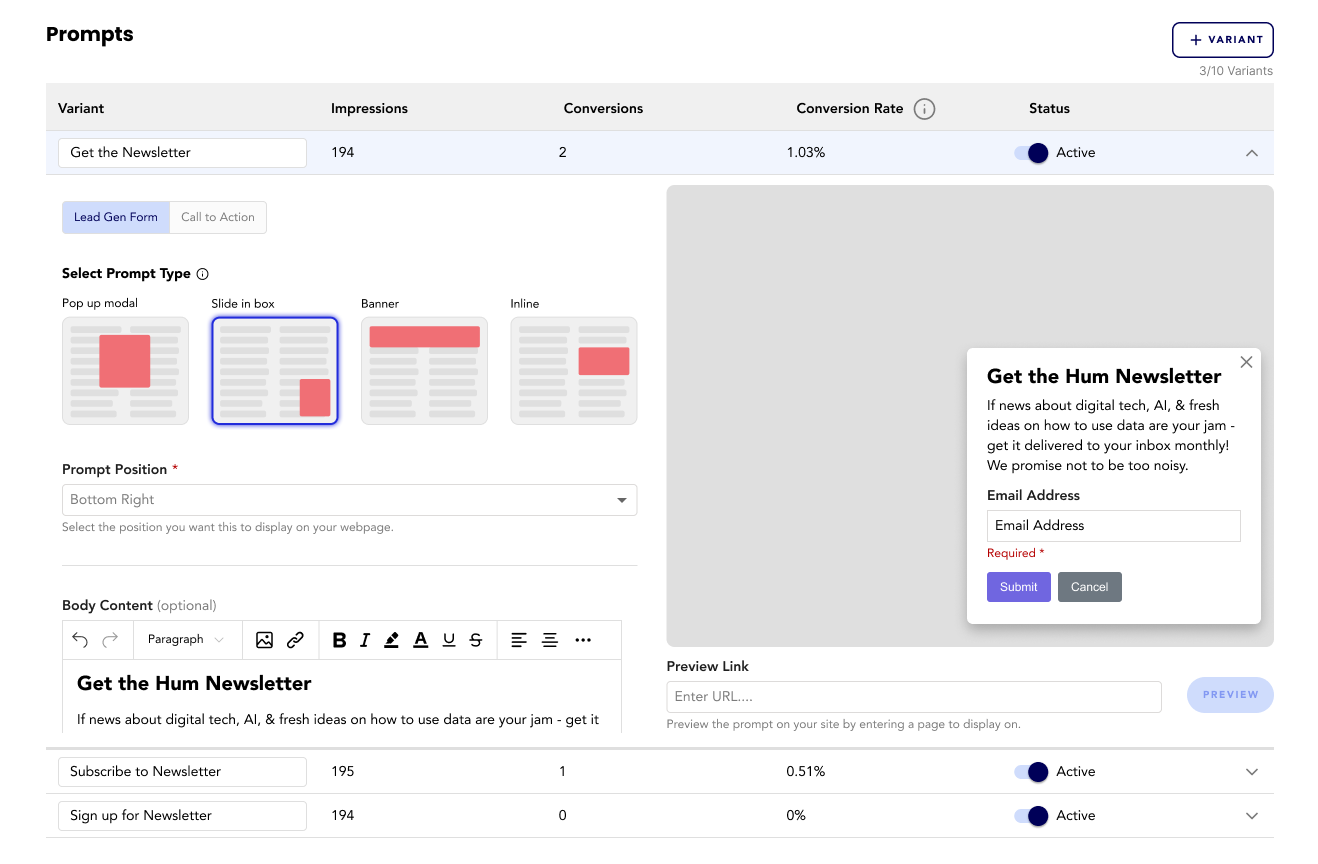

Live Engagement Campaigns now let you create multiple variations of a prompt to see which one is most engaging with your audience.

How it Works

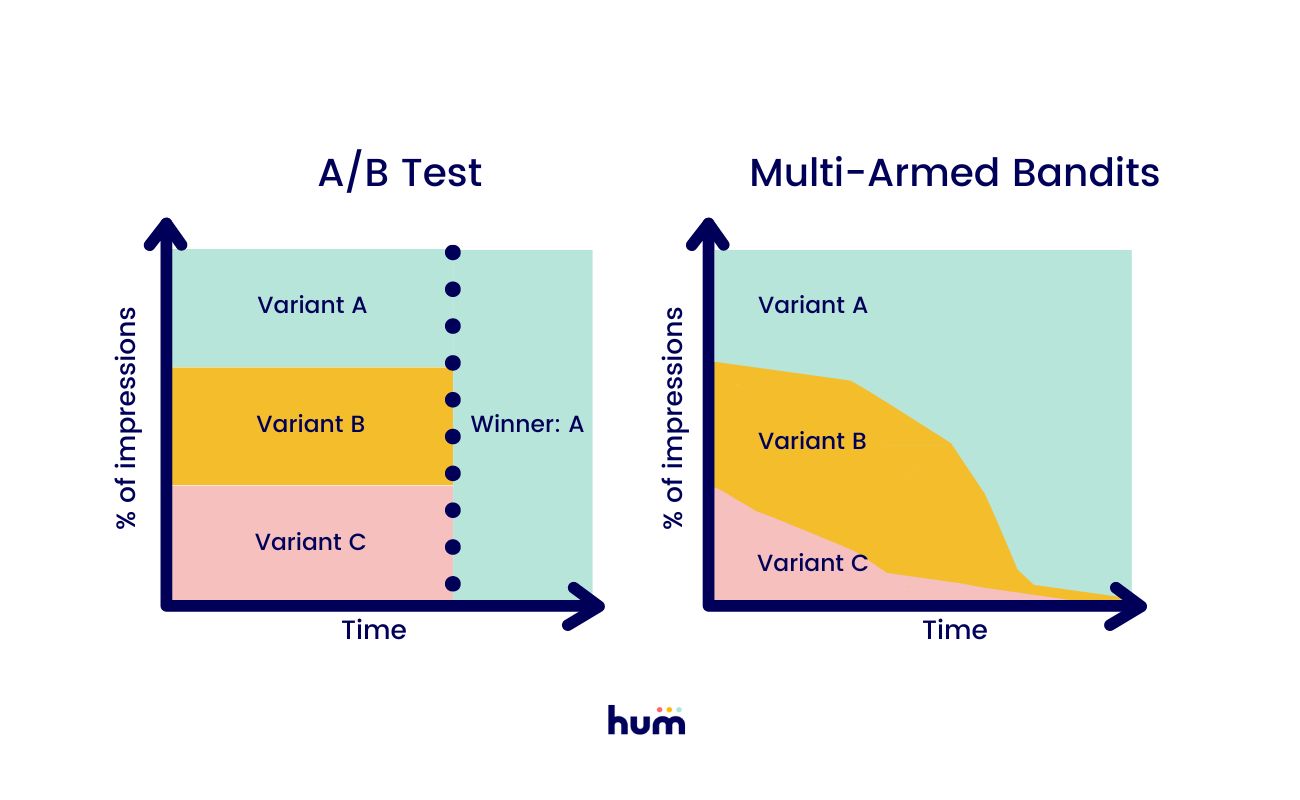

Hum uses multi-armed bandit testing rather than traditional A/B testing to deliver campaigns with variants. This mechanism dynamically assigns more traffic to the highest-performing variants, rather than distributing it evenly as in A/B testing. It enables quicker adaptation, reduces the chance of settling on ineffective options, and ultimately maximizes results from your campaigns. Read more in our blog post.

Best Practices

Make one change per variant. This isolates a single, clear variable to compare across the variants in a campaign.

Try to avoid making changes to live variants. Once a variant is live, changing it will skew your results and make them less meaningful.

Make sure you have a large enough target segment. If you aren’t targeting enough users, you may not get enough impressions for statistically significant results. There is no magic number for the minimum target audience, but we recommend at least 1,000 profiles per variant.

Allow the campaign enough time to run. If you end the campaign early, you may not get enough impressions for statistically significant results. There is no magic run time, but we recommend running the campaign for at least 2 weeks.

Prioritize significant changes. While it is essential to test one change at a time, ensure that the changes you test are significant enough to influence user behavior. Minor modifications may not generate noticeable differences or valuable insights. Focus on changes that have the potential to drive substantial improvements.
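The sizing and run-time recommendations above can be turned into a quick pre-launch check. The following is a minimal sketch, not part of Hum's product: the function name `campaign_warnings` and its parameters are hypothetical, and the thresholds are the recommendations from this article rather than hard limits.

```python
# Recommendations from this article; they are guidance, not enforced limits.
MIN_PROFILES_PER_VARIANT = 1000
MIN_RUN_DAYS = 14


def campaign_warnings(segment_size, active_variants, planned_days):
    """Return a list of warnings for a planned campaign (hypothetical helper)."""
    warnings = []

    # Each active variant should have roughly 1,000 profiles available to it.
    recommended = active_variants * MIN_PROFILES_PER_VARIANT
    if segment_size < recommended:
        warnings.append(
            f"Segment has {segment_size} profiles; about {recommended} "
            f"are recommended for {active_variants} variants."
        )

    # Short campaigns may end before enough impressions accumulate.
    if planned_days < MIN_RUN_DAYS:
        warnings.append(
            f"Planned run is {planned_days} days; at least {MIN_RUN_DAYS} are recommended."
        )

    return warnings
```

For example, a campaign with 3 active variants would want a target segment of roughly 3,000 profiles or more.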

FAQs

Q: What is multi-armed bandit testing?

A: Multi-armed bandit testing is a statistical method used to optimize resource allocation in experiments by dynamically allocating more resources to the best-performing variants.

Q: How does multi-armed bandit testing differ from traditional A/B testing?

A: In traditional A/B testing, traffic is split evenly between variants, while multi-armed bandit testing dynamically allocates traffic based on variant performance, allowing for more efficient and faster experiments.

Q: What are the key benefits of using multi-armed bandit testing?

A: The key benefits of multi-armed bandit testing include faster optimization, improved efficiency in resource allocation, and the ability to balance exploration (testing new variations) and exploitation (capitalizing on the winning variation). This leads to higher conversion rates and better overall performance of marketing campaigns.

Q: How does multi-armed bandit testing help in discovering the best-performing variation?

A: Multi-armed bandit testing constantly learns and adapts based on real-time performance data, allowing for quicker identification of the best-performing variation. It optimizes traffic allocation towards the winning arm, maximizing the overall conversion rate.

Q: How does the algorithm behind multi-armed bandit testing work?

A: Multi-armed bandit algorithms balance exploration (testing new variants) and exploitation (using the best-performing variants) based on statistical models, probability distributions, and adaptive strategies.
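To make the exploration and exploitation trade-off concrete, here is a minimal sketch of one common bandit strategy, Thompson sampling. This article does not state which algorithm Hum uses, so treat this as an illustration of the general idea only. The class `BetaBandit`, the `simulate` function, and the example engagement rates are all assumptions made for the example.

```python
import random


class BetaBandit:
    """Thompson sampling with a Beta(1, 1) prior per variant (illustrative only)."""

    def __init__(self, variant_names, seed=None):
        self.rng = random.Random(seed)
        # Count engagements and non-engagements observed for each variant.
        self.successes = {name: 0 for name in variant_names}
        self.failures = {name: 0 for name in variant_names}

    def choose(self):
        # Draw a plausible engagement rate for each variant from its posterior
        # and serve the variant with the highest draw. Variants with little
        # data produce wide draws (exploration); strong variants usually win
        # the draw (exploitation).
        draws = {
            name: self.rng.betavariate(self.successes[name] + 1, self.failures[name] + 1)
            for name in self.successes
        }
        return max(draws, key=draws.get)

    def record(self, name, engaged):
        # Update the counts for the variant that was served.
        if engaged:
            self.successes[name] += 1
        else:
            self.failures[name] += 1


def simulate(true_rates, impressions=5000, seed=0):
    """Serve simulated impressions and return how many each variant received."""
    bandit = BetaBandit(list(true_rates), seed=seed)
    outcome_rng = random.Random(seed + 1)
    served = {name: 0 for name in true_rates}
    for _ in range(impressions):
        name = bandit.choose()
        served[name] += 1
        # Simulate whether this impression led to an engagement.
        bandit.record(name, outcome_rng.random() < true_rates[name])
    return served
```

Calling `simulate({"A": 0.02, "B": 0.10})` with these made-up rates shows the behavior described above: early impressions are spread across both variants, and most later traffic shifts to the stronger one.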

Q: How is a winner determined in multi-armed bandit testing?

A: In multi-armed bandit testing, the winning variant is determined based on statistical significance and performance. The algorithm monitors the performance of different variants over time and updates probability distributions. As more data is collected, the algorithm becomes more confident in identifying the best-performing variant. The winning variant is typically the one with the highest observed conversion rate or performance metric, while taking into account the statistical certainty associated with the results.
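One way to picture "statistical certainty" is to estimate the probability that each variant is truly the best, given the data collected so far. The sketch below does this with a simple Monte Carlo simulation over Beta distributions. It is an assumption-laden illustration, not Hum's actual calculation: the function name `probability_best`, the uniform prior, and the sample numbers are all hypothetical.

```python
import random


def probability_best(results, draws=20000, seed=0):
    """Estimate the chance that each variant has the highest true engagement rate.

    results maps a variant name to (engagements, impressions).
    """
    rng = random.Random(seed)
    wins = {name: 0 for name in results}
    for _ in range(draws):
        # Sample a plausible rate for each variant from a Beta posterior
        # (uniform prior), then note which variant came out on top.
        sampled = {
            name: rng.betavariate(engaged + 1, shown - engaged + 1)
            for name, (engaged, shown) in results.items()
        }
        wins[max(sampled, key=sampled.get)] += 1
    return {name: count / draws for name, count in wins.items()}
```

With made-up results such as `{"A": (20, 1000), "B": (60, 1000)}`, variant B comes out as the best in nearly every draw. With only a handful of impressions per variant, the probabilities stay much closer together, which is why a large enough segment and run time matter.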

Q: Are there any potential drawbacks or limitations of multi-armed bandit testing?

A: The main limitation is the need for continuous traffic to provide accurate results. Initial exploration may have a short-term negative impact on performance, and certain scenarios may require traditional A/B testing instead.

Q: How long does the variant test run?

A: The variant test runs for the length of the campaign.

Q: Is there a limit to how many variants I can create?

A: Yes, a campaign can have up to 10 active variants. Inactive variants are not counted toward the variant limit.

Q: Can I disable a variant that isn’t performing well?

A: Yes, you can disable any variant by using the status toggle.

Q: Can I make more than 1 change to a variant?

A: Yes. While we recommend changing only one aspect per variant (such as the headline), you are not limited in the number of changes you can make to each variant.